Buzzwords come and go in the business world, but one of the most persistent in recent years is the idea of a “digital twin.”

A digital twin is a virtual model of an object, system, or process. It can, for example, be used to represent the complexities of a building’s HVAC system or to model the electronic systems inside an aircraft.

We also often encounter the phrase alongside similarly broad terminology, like “digital transformation” or “digital process twin.” These terms offer the promise of revolutionizing your business process landscape and increasing operational efficiency. And in some contexts, digital twins can easily do that. For instance, they can prove valuable in optimizing processes inside a factory.

But if you’ve ever investigated the concept as a way to build a “digital twin of an organization” (DTO), what you found was likely far less dynamic and far less clearly defined.

Attempts to move from something like the factory floor to the more abstract, interconnected processes of a business often fall short. They revert to a static virtual representation rather than a living model of how the organization functions.

To put it bluntly, what you end up with is a map. Great, but now what? What do you do with it? How does this transform your organization and boost operational efficiency?

When the answers to those questions fail to materialize, many business leaders shelve the whole digital twin idea in favor of a more definable strategy.

While that’s understandable, it’s also a massive missed opportunity.

When done right, building a DTO can help your company realize tremendous gains: improved productivity, reduced cost, and compliant operations.

So, What Does a True DTO Look Like?

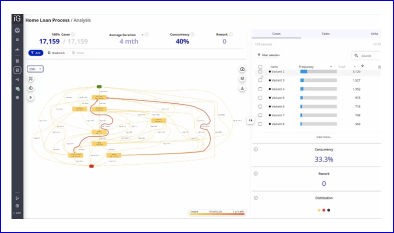

A true DTO is more than a flat map of your processes. It’s a living, breathing, virtual recreation. It allows you to predict and manage process outcomes and track real-time performance.

In this context, defining what individual (or multiple) processes look like is only the first step. A DTO should also empower you to measure and refine process performance. You should be able to simulate and predict process changes before investing in them in real life.

To achieve that, you need a platform that delivers process intelligence to complement your people and processes. You also need a clear roadmap for how to use it to unlock deep process value.

Three Steps to Building a Comprehensive Digital Twin of Your Organization

In broad terms, building a DTO can be broken down into three steps. Each section below summarizes one of these steps and the tools and capabilities you’ll need to create a digital twin that adds real-world business value.

Step One: Discover

The first step is to document and virtually recreate how your processes run in real life. This includes the individual steps that make up an end-to-end process, as well as the connections and interdependencies with other processes. On top of the what and how, you’ll also want to cover the who, why, and when.

This involves leveraging both process mining and process modeling. With modeling, you can identify everyone associated with a process and define RACI roles, the systems used, the regulations to comply with, and any other metadata that’s relevant to the process.

With process mining, you can automatically generate process maps from the digital breadcrumbs that your IT systems are already producing. You can then enrich those maps by modeling off-system process steps.

Ideally, the information you capture at this stage will reside in a central process repository. By keeping all the process information in one place, you can organize and document processes so that each one, and its connections to the others, is easier to understand. In other words, you can see your processes in context.
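To make the idea of a generated process map concrete, here is a minimal sketch in Python. It assumes a tiny, made-up event log of (case, activity, timestamp) rows and counts which activity directly follows which, one simple way of deriving a map from system records. The data, the `directly_follows` helper, and the activity names are all hypothetical, and commercial process mining tools do considerably more than this.

```python
from collections import Counter, defaultdict

# Hypothetical event log rows: (case_id, activity, timestamp).
# In practice these would be exported from an ERP or workflow system.
EVENT_LOG = [
    ("inv-1", "Receive invoice", 1),
    ("inv-1", "Approve invoice", 2),
    ("inv-1", "Pay invoice", 3),
    ("inv-2", "Receive invoice", 1),
    ("inv-2", "Pay invoice", 4),
    ("inv-2", "Approve invoice", 2),
    ("inv-3", "Receive invoice", 5),
    ("inv-3", "Approve invoice", 6),
    ("inv-3", "Reject invoice", 7),
]


def directly_follows(event_log):
    """Count how often one activity is directly followed by another within a case."""
    traces = defaultdict(list)
    for case_id, activity, timestamp in event_log:
        traces[case_id].append((timestamp, activity))

    edges = Counter()
    for events in traces.values():
        # Order each case by timestamp before pairing neighbouring activities.
        ordered = [activity for _, activity in sorted(events)]
        for source, target in zip(ordered, ordered[1:]):
            edges[(source, target)] += 1
    return edges


process_map = directly_follows(EVENT_LOG)
for (source, target), count in sorted(process_map.items()):
    print(f"{source} -> {target}: {count}")
```

Each counted pair is an edge in the process map, and the counts show which paths are common and which are exceptions.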

And that’s not even the best part. So far, this is still the same boring static model that we described at the beginning. Process mining changes that by providing insights into how the process is running in reality. You can measure how fast, efficient, and compliant the process really is. Better yet, you can analyze the root causes of inefficiencies, both those you were looking for and those you weren’t. (It’s absolutely mind-boggling how many companies have no idea they’re systematically paying invoices twice.)
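As a rough illustration of these measurements, the sketch below computes cycle times per case and flags payments that share the same invoice number, vendor, and amount. The records, field layout, and helper names are invented for the example, and the duplicate rule is a deliberately naive assumption; a real analysis would also look for near-matches.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical case durations: case_id -> (start_day, end_day).
CASES = {"inv-1": (0, 4), "inv-2": (1, 12), "inv-3": (2, 5)}

# Hypothetical payment records: (invoice_number, vendor, amount, payment_id).
PAYMENTS = [
    ("INV-100", "Acme", 1200.00, "P-1"),
    ("INV-101", "Globex", 560.50, "P-2"),
    ("INV-100", "Acme", 1200.00, "P-3"),
    ("INV-102", "Acme", 1200.00, "P-4"),
]


def cycle_times(cases):
    """Return the elapsed days between start and end for each case."""
    return {case_id: end - start for case_id, (start, end) in cases.items()}


def find_duplicate_payments(payments):
    """Group payments by (invoice, vendor, amount) and keep groups paid more than once."""
    groups = defaultdict(list)
    for invoice_number, vendor, amount, payment_id in payments:
        groups[(invoice_number, vendor, amount)].append(payment_id)
    return {key: ids for key, ids in groups.items() if len(ids) > 1}


durations = cycle_times(CASES)
average_days = mean(list(durations.values()))
duplicates = find_duplicate_payments(PAYMENTS)

print(f"Average cycle time: {average_days} days")
for (invoice_number, vendor, amount), ids in duplicates.items():
    print(f"{invoice_number} ({vendor}, {amount:.2f}) paid {len(ids)} times: {ids}")
```

Here INV-100 is flagged because it was paid twice, while INV-102 is not, even though it has the same vendor and amount.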

Remember that, realistically, most companies won’t have a perfect sense of how their processes function right from the start. They also won’t have every process mapped out, ready for the next steps. The goal is to build towards a more complete picture of your process landscape.

At this point we know how our processes work today and in what context. We know how they’re performing, where the bottlenecks are, and the root causes behind those bottlenecks. It’s finally time to move from the “as-is” to the “to-be”.

Step Two: Design

With this new perspective, you can begin to formulate answers to questions like: How can the business improve this process? And: How can problems be avoided?

You want to create blueprints for future changes using all the information you obtained. This should be a collaborative exercise. Center of excellence, risk & compliance, and line of business teams all have relevant inputs for designing future state processes.

At this point, you should be asking what results will actually come from improving the process. Your executives and stakeholders will be asking: “How much more efficient can it be? How much less can it cost? Will it comply with external policy and external regulation?”

Enter process simulation, the key to quantifying and qualifying future process performance based on proposed changes. Using the data gathered from process mining, you already have an accurate baseline. This is crucial, because an accurate baseline will give you confidence that the predicted impacts of the changes are reliable.

You can simulate multiple process improvement options and compare the results of each. For each option, you weigh the benefits (productivity gains, reduced cost, compliant operations) against the cost of implementing it (people, technology).

Whether the ultimate decision-makers want quick wins or more strategic changes, you can clearly articulate the tradeoffs of each option.
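The comparison described above can be sketched with a small Monte Carlo simulation. Everything in this example is an assumption made for illustration: the three options, their step durations and implementation costs, and the choice of exponentially distributed step times. In practice, the baseline figures would come from your process mining data.

```python
import random
from statistics import mean

# Hypothetical options: mean hours per process step and a one-off implementation cost.
OPTIONS = {
    "as-is": {"step_hours": [4.0, 8.0, 2.0], "implementation_cost": 0},
    "automate approval": {"step_hours": [4.0, 1.0, 2.0], "implementation_cost": 50_000},
    "add a clerk": {"step_hours": [3.0, 6.0, 2.0], "implementation_cost": 20_000},
}


def simulate_cycle_time(step_hours, cases=5_000, seed=42):
    """Estimate the mean end-to-end cycle time by sampling each step's duration.

    Assumes exponentially distributed step durations; the same seed is reused
    for every option so that the options are compared on identical random draws.
    """
    rng = random.Random(seed)
    totals = []
    for _ in range(cases):
        totals.append(sum(rng.expovariate(1.0 / hours) for hours in step_hours))
    return mean(totals)


results = {
    name: simulate_cycle_time(option["step_hours"])
    for name, option in OPTIONS.items()
}

baseline = results["as-is"]
for name, option in OPTIONS.items():
    hours_saved = baseline - results[name]
    print(
        f"{name}: {results[name]:.1f} h per case, "
        f"{hours_saved:.1f} h saved, cost {option['implementation_cost']}"
    )
```

Putting hours saved per case next to implementation cost gives decision-makers a like-for-like view of each option.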

Step Three: Optimize

Step three is to formalize the ideas laid out in the last two stages into actionable changes. Your process can now be refined based on your data and “what if” simulations, or even overhauled completely.

Some of these improvements, such as hiring new people, happen outside of the digital twin. But most of them will be digital-native, such as process automation, which was likely presented as an improvement option to increase productivity and reduce human error.

Regardless of the change, everyone affected needs to be aware of it. Digital twin technology should include mechanisms for review and approval cycles as well as version control. As the process changes, process participants are notified and confirm that they have understood the changes. This helps with two more important aspects of transformation: change management and audit.

Finally, you need to monitor and measure the performance of your new process. Is it performing as expected? If not, what hasn’t been accounted for? And how can the process be improved? This brings us back to the Discover step, for a cycle of continuous improvement.

Realizing Business Value With a Digital Twin of an Organization

By implementing process changes in this systematic way, the work of building your organization’s DTO itself becomes iterative. Instead of a monumental task to be tackled all at once, building a digital twin of your organization becomes about recreating the process landscape one section at a time. And if you ask the right questions at each stage, the results are much more likely to drive true business value.

However, leveraging the full suite of process intelligence technology is crucial. Without simulation and design capabilities, the usefulness of the data you gain from process mining is limited. Without the ability to automate troublesome segments of processes, it becomes more challenging to handle risk and performance issues.

Combining these capabilities also makes it far easier to monitor how changing one aspect of your process affects other factors downstream. For example, what are the knock-on effects for cost if you optimize a process for throughput and customer experience? Simulating the process change, implementing it, and monitoring the results provides a clear-cut answer.

All of this adds up to an approach to building your DTO that adds clearly defined, measurable value at every step, instead of vague promises of organizational efficiency. It’s an approach I like to think of as: “Less magic, more science.” And a more rewarding journey to true process excellence.

Interested in learning more about how iGrafx can help you jump-start your organization’s process maturity? Try a free demo of our Process360 Live platform to enable seamless process optimization with process mining, process design, analysis, simulation, and process automation.